An AI policy template is essential for organizations that are adopting AI tools or expanding their use. Although more than 75% of companies have integrated AI into at least one business function, many still lack clear policies. This gap exposes them to risks such as inaccurate outputs, cybersecurity threats, and legal issues related to data and intellectual property.

This article discusses what an AI policy is, the benefits of having clear guidelines for AI usage, and how an AI policy can help maintain compliance with legal and regulatory standards. It also provides guidance on what to include in your AI policy, as well as a free customizable template you can use to create one for your organization.

Want to see how AI fits into day-to-day HR work? Download our AI in HR Cheat Sheet Collection for 10 one-page guides covering tools, use cases, prompts, and practical tips to help you apply AI effectively across your HR function.

Contents

What is an AI policy?

Why implement an AI policy?

What to include in an AI policy

The risks of not having an AI policy

What to include in an AI policy template

Free AI policy template

10 steps to develop and implement a sound AI policy

3 AI policy examples

What is an AI policy?

An AI policy, also known as an AI usage policy or acceptable use policy, is a set of guidelines that outlines how employees should responsibly use AI tools in the workplace. It provides clear instructions on appropriate use cases, data handling practices, and oversight responsibilities.

By establishing these rules, organizations can ensure that AI technology is used ethically, securely, and in line with company objectives. This helps mitigate risks related to data privacy, intellectual property, and compliance.

Without a policy, employees are left to make these decisions on their own, which can lead to inconsistent practices or even serious risks like data breaches or discriminatory outcomes. Having a policy in place also builds trust and helps your organization make the most of AI while reducing legal, ethical, and reputational risks.

Why implement an AI policy?

An AI policy tells employees how they should and shouldn’t use AI in their daily tasks. This helps teams across HR, marketing, IT, and finance use AI tools responsibly and consistently. It also supports safe, sustainable use: avoiding harm, reducing bias, and getting real value from the tools.

Additionally, it defines ethical, legal, and professional boundaries. AI is powerful but risky: it can generate biased content, leak sensitive data, or spread false information. A clear policy tells employees what’s okay, what’s not, and why it matters. Without this guidance, people may break rules without realizing it or make choices that don’t match their company’s values.

An AI policy clears up confusion about AI tool use by listing which tools are allowed and under what conditions. A robust AI policy also keeps your organization compliant, helping it avoid legal and reputational problems.

What to include in an AI policy

If you have yet to develop an AI policy for your organization, here’s a quick guide on what you should include:

- Purpose and scope: Start with a clear explanation of why the policy exists and to whom it applies. This usually includes all staff, contractors, and any partners using AI tools on behalf of the company.

- Approved tools and use cases: List approved AI tools and their permitted uses. For instance, it may be okay to use generative AI to propose ideas or summarize notes, but not to send client emails or make hiring decisions without review.

- Data privacy and security: Make clear what kinds of data staff members can and cannot enter into AI tools. Emphasize the importance of protecting confidential or personally identifiable information.

- Ethical use and bias prevention: Set expectations for fair, non-discriminatory AI use. This is especially important in HR, which may use AI to screen candidates or evaluate performance. Regular audits can help reduce bias and boost transparency.

- Oversight and accountability: Define who is responsible for managing AI use and what employees should do if they encounter problems or uncertainty. Having a clear reporting process helps you spot and address issues early.

- Training and awareness: Offer training so employees understand how to use AI tools effectively and what their limitations are. This helps build confidence and prevent misuse of AI.

- Legal compliance and company values: Explain how the policy aligns with relevant laws (e.g., the EU AI Act or New York City’s law requiring bias audits of automated hiring tools) and with organizational values. The policy should reflect a commitment to ethics, privacy, and inclusion.

The risks of not having an AI policy

Not having an AI policy in place can expose your organization to serious risks, including the following:

- Sensitive data exposure: Staff may unknowingly share confidential company or customer data with external AI platforms, which may store it or use it to train models outside your control. This raises serious privacy and compliance concerns.

- Biased, misleading, or inaccurate content: Without proper oversight, AI tools may generate content that reflects hidden biases, factual errors, or problematic assumptions. These outputs can cause real harm, especially when they feed into hiring decisions or customer communications.

- Legal issues: AI-generated content can lead to copyright violations, breaches of privacy laws, or the unintentional spread of misinformation. A lack of policy raises the risk of non-compliance, which could lead to costly lawsuits or regulatory penalties.

- Reputational damage: AI tool misuse that results in unethical or discriminatory outcomes can lead to immediate, widespread backlash. Public trust is hard to earn and easy to lose; a clear corporate AI policy helps prevent reputational damage.

- Lack of clarity: A lack of formal guidance leads to inconsistent practices, confusion, and even conflict among departments. Policies remove ambiguity and ensure everyone is working from the same playbook.

Build your skills in developing a robust AI policy

Learn to develop and implement a robust, detailed, well-rounded AI policy that provides clarity, ensures compliance, and protects your organization from risk.

AIHR’s Artificial Intelligence for HR Certificate Program will help you understand the different types of AI, develop a future-proof AI strategy, drive responsible adoption, and document an AI adoption roadmap that delivers impactful results.

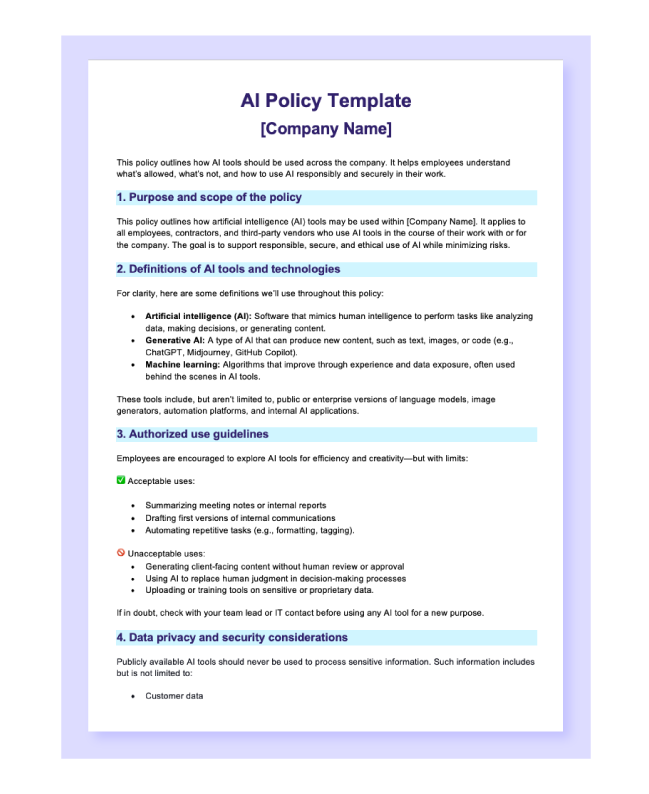

What to include in an AI policy template

Building your organization’s AI policy from a template promotes consistency and standardization, and it ensures you don’t leave out any critical information or sections. Here’s what to include in an AI policy template:

Purpose and scope of the policy

Begin by stating why the policy exists and to whom it applies. This helps set the tone and clarify expectations. Most policies apply to all employees, contractors, and third-party vendors who use AI tools on behalf of the company.

Definitions of AI tools and technologies

Not everyone understands what counts as AI. Define key terms like “artificial intelligence”, “generative AI”, and “machine learning”, and give examples of the tools covered (e.g., ChatGPT, Midjourney, or custom enterprise models).

Authorized use guidelines

Outline how AI tools may and may not be used within the company. This could include task-specific permissions, such as using AI to summarize internal reports but not to generate client proposals without review.

Data privacy and security considerations

Include rules for handling sensitive information. Make it clear that employees should not input confidential data into external platforms. The policy should also explain how data must be stored, accessed, and protected.

Ethical use guidelines

Highlight your company’s commitment to ethical AI use. This means avoiding discrimination, respecting intellectual property, and using AI in ways that align with your organization’s values. Using AI ethically, not just efficiently, demonstrates your company’s commitment to fairness.

Human oversight requirements

Emphasize that AI tools should not operate in isolation for critical decisions. Human review is essential, especially in areas like hiring, promotions, or customer communication. While AI can help streamline processes and make your HR function more efficient, it should support human judgment, not replace it.

Risk management instructions

Explain how employees should identify and report potential risks or unintended outcomes. Provide contact points and processes for escalation, in line with your company’s broader risk management framework. You can also refer to the AIHR AI Risk Framework for guidance.

Use limitations and prohibited use guidelines

List clearly what employees are not allowed to do with AI tools. This might include generating deceptive content, impersonating others, bypassing legal requirements, or engaging in personal projects on work systems.

Training and awareness directions

Encourage or require training sessions to help teams understand how to use AI safely and effectively. Offer learning resources and highlight where employees can go for help or clarification.

Policy review and update process

AI is evolving fast, and your policy should, too. Set regular intervals for reviewing and updating your AI usage policy, and include a mechanism for gathering employee feedback to keep the policy practical and relevant.

Free AI policy template

To make it easier for you to get started, AIHR has created a free, customizable AI policy template to help guide your own AI policy development. Download the template below.

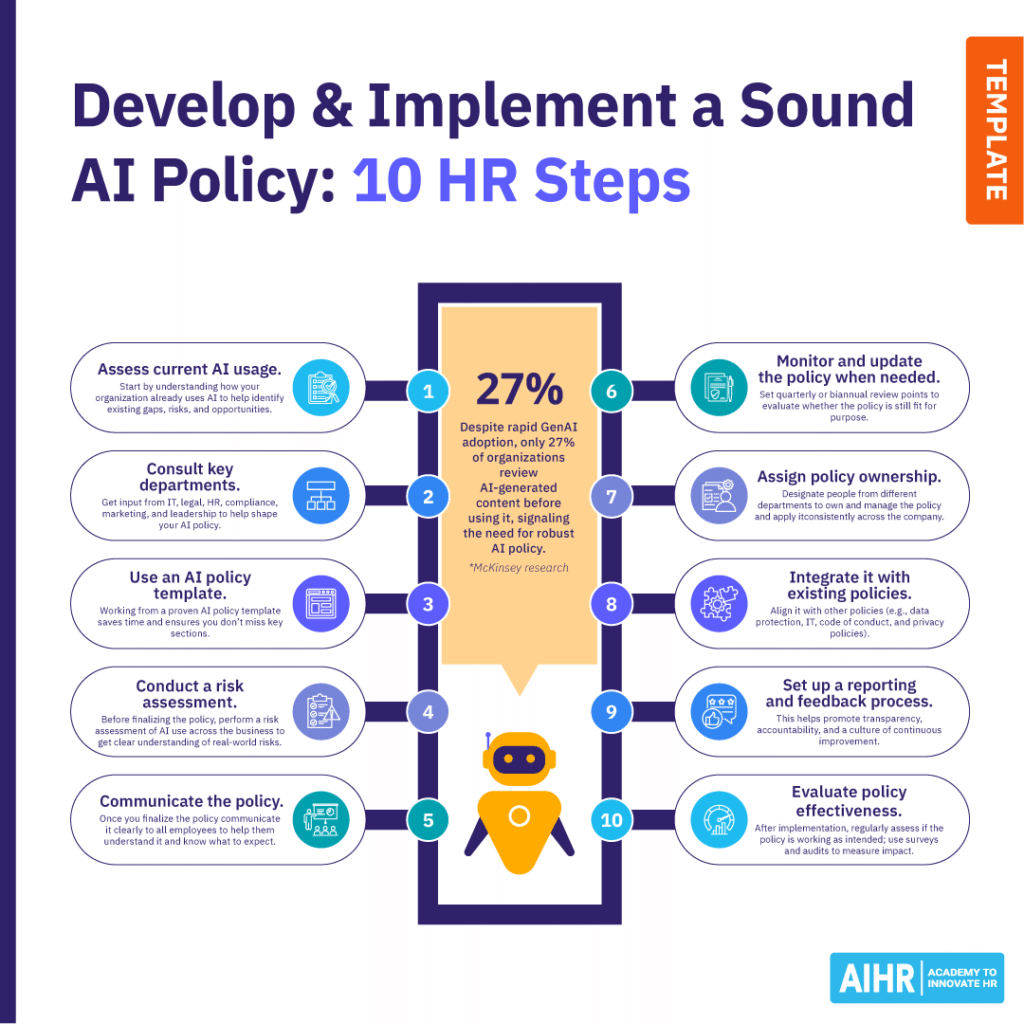

10 steps to develop and implement a sound AI policy

Here are 10 steps to follow to ensure you create and roll out an effective AI policy:

Step 1: Assess current AI usage

Start by understanding how your organization is already using AI. Which teams are using tools like ChatGPT or Midjourney, and what tasks are AI helping to automate? This helps identify existing gaps, risks, and opportunities. It also sets a baseline for developing a policy that reflects actual business needs.

Step 2: Consult key departments

Involve stakeholders from key departments, such as HR, legal, IT, compliance, and the teams that use AI most. They can flag department-specific needs and risks, including standard practices for protecting institutional, personal, and proprietary data when using AI tools. Their input helps the policy prevent employees from sharing confidential data on AI platforms.

Step 3: Use an AI policy template

An AI policy template can help save time and ensure you don’t miss key sections. Use it to define your policy’s purpose, clarify terms, set boundaries for use, and build in ethical, legal, and data protection measures. This also helps ensure consistency across departments.

Step 4: Conduct a risk assessment

Before finalizing your policy, perform a formal risk assessment of AI use across the business. This includes looking at potential legal, ethical, reputational, and cybersecurity risks. The AIHR AI Risk Framework can guide this process by giving you a clear view of real-world risks.

Step 5: Communicate the policy

Once you finalize the policy, communicate it clearly to all employees. Explain why it matters, how it protects the company and the individual, and what they can expect. Offer training or Q&A sessions to ensure people feel confident using AI tools within the new framework.

Step 6: Monitor and update regularly

AI is evolving fast, and so should your policy. Set regular review points, such as quarterly or twice a year, to evaluate whether the policy is still fit for purpose. Monitor how AI is being used, gather feedback, and adjust your policy as needed to keep up with technological, legal, and workplace changes.

Step 7: Assign policy ownership

Designate one or more people (e.g., from HR, legal, or compliance) to own and manage the policy. This person or team should be responsible for answering questions, overseeing updates, and ensuring the policy is applied consistently across the company.

Step 8: Integrate it with existing policies

Align the AI policy with other policies, such as your organization’s data protection policy, IT policy, employee code of conduct, and privacy agreements. Integration ensures consistency and helps your company avoid contradictions.

Step 9: Set up a reporting and feedback process

Employees must have a simple way to report issues, ask questions, or flag potential misuse of AI tools. This helps promote transparency, accountability, and a culture of continuous improvement. Feedback also helps inform policy updates.

Step 10: Evaluate policy effectiveness

After implementation, regularly assess whether the policy is working as intended. Are teams following it? Are they using AI tools appropriately? Use surveys, audits, and performance reviews to measure impact and identify areas for improvement.

3 AI policy examples

Here are three real-life examples of AI policies that organizations use for different AI-related purposes:

Example 1: AI acceptable use policy

An AI acceptable use policy outlines the dos and don’ts for employees interacting with AI tools. For instance, the University of Texas provides guidelines on protecting institutional, personal, and proprietary information when using AI tools. It advises against entering confidential data into an AI platform and emphasizes following data protection standards.

Example 2: Generative AI (GenAI) usage policy

A GenAI usage policy focuses on tools that produce content. Fisher Phillips offers a sample policy addressing the use of third-party generative AI tools like ChatGPT and DALL·E 2. It advises employees to verify the accuracy of AI-generated content, avoid using such tools for employment decisions, and refrain from sharing confidential or proprietary information.

Example 3: Corporate AI policy

A corporate AI policy provides a comprehensive framework for AI integration. Pfizer’s policy focuses on transparency, ensuring users understand AI systems, and upholds stringent data privacy standards to protect patient information. It maintains accountability by ensuring AI applications meet ethical, legal, and regulatory standards, with appropriate human oversight.

To sum up

The widespread availability and user-friendly nature of AI tools have blurred the lines between professional and personal use. Employees may integrate these technologies into their daily workflows without fully understanding the security implications. Without clear guidelines, organizations risk data breaches, compliance violations, and ethical dilemmas.

A structured AI policy is essential for defining acceptable use cases, specifying approved tools, and outlining data handling procedures. It should also establish a process for evaluating new AI solutions, assign accountability, and align with corporate strategy and regulatory requirements.